“Our approach to optimising a client’s scheduler”

After a first major success, our client asked us to look into their batch scheduler, the tool that automatically starts their batch jobs. The goal was to bring both the daily and the monthly batches under 6 hours.

The daily batch was taking almost 24 hours (sometimes more), and the monthly batch was taking over 30 hours.

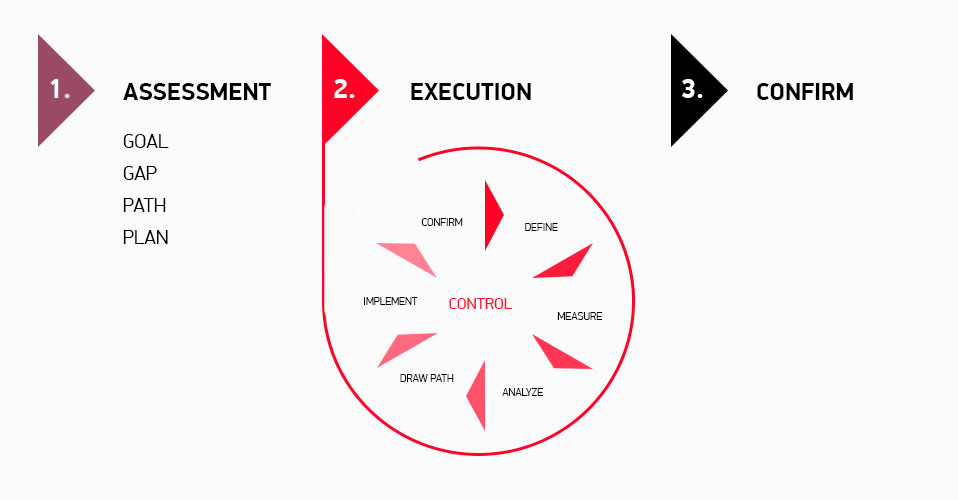

After requesting the necessary access (scheduler tool, databases involved, batch servers, etc.), we built a stable solution to measure the critical path of the daily and monthly batches. It was designed to be less prone to manual errors and to be reused throughout the long duration of the project. We have now been using it for almost 2 years.

We soon encountered the first obstacle. The client measured the monthly batch differently from the method we considered most accurate. This is where our JOINER side came to the fore: we proposed a meeting to agree on how to measure the critical path, and an agreement was reached fairly peacefully.

We were then ready to define the problem by answering one question: what are the top contributors to the long duration of the batches?

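When multiple paths are involved, the first one to address is usually the critical path: the path with the highest total cost, which in this case is time. Because a batch cannot finish before its slowest chain of dependent jobs does, shortening jobs that are not on that chain does not reduce the overall duration.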
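To make the idea concrete, here is a minimal sketch of how a critical path can be computed from job durations and dependencies. It assumes a simplified scheduler in which a job starts as soon as all its predecessors have finished (no resource limits or time windows); the job names and durations are hypothetical, and this is not the measurement tool described above.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def critical_path(durations, depends_on):
    """Return (jobs on the critical path, total duration).

    durations:  job name -> duration (e.g. hours)
    depends_on: job name -> set of jobs that must finish first
    Assumes every job, including every predecessor, appears in `durations`
    and that a job starts as soon as all its predecessors have finished.
    """
    graph = {job: set(depends_on.get(job, ())) for job in durations}
    finish = {}  # earliest finish time of each job
    via = {}     # predecessor that determines each job's start time
    for job in TopologicalSorter(graph).static_order():
        # A job can only start once its slowest predecessor has finished.
        pred = max(graph[job], key=lambda p: finish[p], default=None)
        start = finish[pred] if pred is not None else 0.0
        finish[job] = start + durations[job]
        via[job] = pred
    # Walk back from the job that finishes last to recover the path.
    job = max(finish, key=finish.get)
    total = finish[job]
    path = []
    while job is not None:
        path.append(job)
        job = via[job]
    return path[::-1], total


# Hypothetical example: "archive" runs in parallel but is not on the critical path.
durations = {"extract": 2.0, "load": 5.0, "report": 1.0, "archive": 0.5}
depends_on = {"load": {"extract"}, "report": {"load"}, "archive": {"extract"}}
path, total = critical_path(durations, depends_on)
print(path, total)  # ['extract', 'load', 'report'] 8.0
```

In this toy example, making "archive" faster changes nothing, while every hour saved on "extract", "load" or "report" shortens the whole batch, until another path becomes the critical one.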

“By Performing to perfection, we create Time”

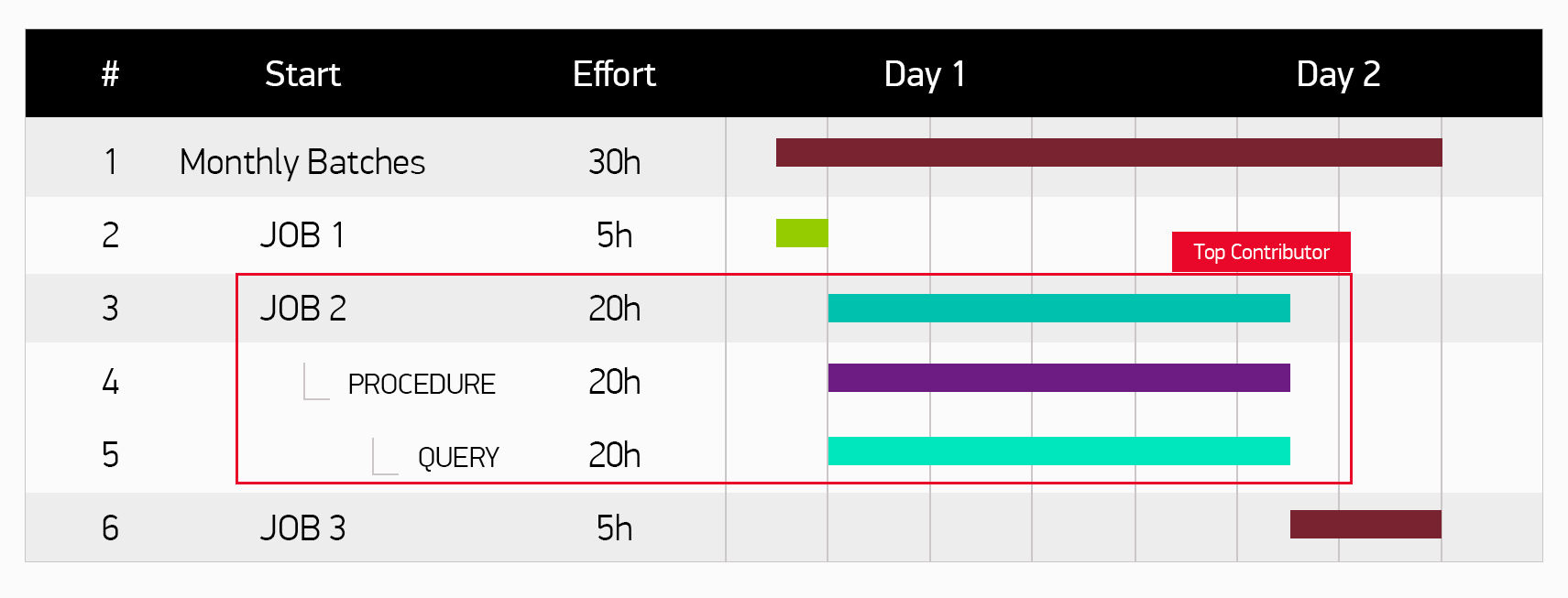

The problem definition should be carried down to the most atomic level possible. First, identify the longest jobs; then look inside each of them for its top contributor. Like Matryoshka dolls, we keep unwrapping layer after layer until the innermost top contributor is identified.
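The drill-down can be pictured as walking a tree of measured components (batch, job, procedure, statement) and following the biggest contributor at each level. The sketch below is a simplified, greedy illustration under that assumption; the `Component` structure, names and timings are hypothetical, and a real analysis would usually examine the top few contributors at each level rather than only the largest one.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    """A batch, job, procedure or statement with its measured elapsed time."""
    name: str
    seconds: float
    children: list = field(default_factory=list)


def drill_down(node):
    """Follow the largest child at each level down to the innermost contributor."""
    chain = [node.name]
    while node.children:
        node = max(node.children, key=lambda c: c.seconds)
        chain.append(node.name)
    return chain


# Hypothetical measurements for one batch.
batch = Component("daily_batch", 80000, [
    Component("job_a", 30000, [
        Component("proc_x", 28000, [Component("query_q1", 27000)]),
        Component("proc_y", 2000),
    ]),
    Component("job_b", 10000),
])
print(drill_down(batch))  # ['daily_batch', 'job_a', 'proc_x', 'query_q1']
```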

Defining the problem can account for more than half of the effort needed to reach a solution.

Once all the top contributors for the daily and monthly batches were identified, we addressed each of them in turn to produce clear recommendations for performance improvement.

For example, within the longest job on the critical path, we pinpointed a database procedure call in which a single query was executed 1 million times. The sum of the elapsed times of those executions made up the total duration of the job. The root cause of this job's duration was therefore that query being executed 1 million times.
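The arithmetic behind this is simple but easy to overlook: a fast statement becomes the top contributor when it runs often enough. At a hypothetical 50 ms per execution, 1,000,000 executions add up to 50,000 s, roughly 13.9 hours. A minimal sketch of ranking statements by total elapsed time, assuming the per-execution timings have already been collected from some trace or monitoring source as `(statement_id, elapsed_seconds)` pairs (the statement names and timings are hypothetical):

```python
from collections import defaultdict


def top_statements(executions, n=3):
    """Rank statements by total elapsed time.

    executions: iterable of (statement_id, elapsed_seconds) pairs.
    Returns (statement_id, executions, total_seconds, share_of_total) tuples.
    """
    total = defaultdict(float)
    count = defaultdict(int)
    for stmt, elapsed in executions:
        total[stmt] += elapsed
        count[stmt] += 1
    grand_total = sum(total.values())
    ranked = sorted(total, key=total.get, reverse=True)[:n]
    return [
        (s, count[s], total[s], total[s] / grand_total if grand_total else 0.0)
        for s in ranked
    ]


# Hypothetical trace: a fast lookup executed many times dominates
# a single slow insert.
executions = [("q_lookup", 0.05)] * 1000 + [("q_insert", 2.0), ("q_commit", 0.5)]
for stmt, n, secs, share in top_statements(executions):
    print(f"{stmt}: {n} executions, {secs:.1f} s, {share:.0%} of total")
```

Ranking by total rather than by average time is what surfaces the frequently executed query instead of the individually slowest one.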

What could the solution be? Improving the query's response time, redesigning the job so it does not need 1 million executions, running the executions in parallel, among others.

We used many different approaches to address the wide range of performance issues we analysed. More than 60 implemented changes later, the goals for the daily batch have been achieved, and we are well on track to achieve the monthly goals in the short term.

This is one case where Crossjoin’s resilience came to the fore: we always found a solution and never gave up on the goals we shared with the client.

Article by:

Paulo Maia

Performance Consultant
